This is a serious problem.

A month ago Munro realized bias in AI is bad, as you can see in his tweet above. And suddenly he is the leading voice in a NYT story on it?

Cade Metz appears to be a white man at the NYT who reached out to another white man, Dr. Munro. They then discuss bias in AI, which is Munro’s new interest and the subject of his future book.

Was there any point at which either of them thought maybe someone who isn’t like them, someone who isn’t a white man and who has been doing this a long time, could be the lead voice in their story about bias in AI?

Let’s dig in.

The transition in the story itself is so remarkably tone-deaf that it’s hard to believe it is real.

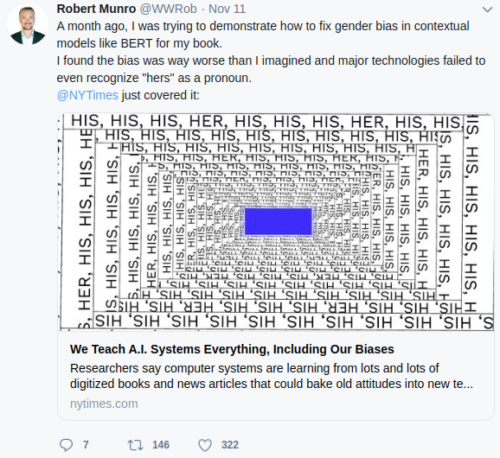

BERT and its peers are more likely to associate men with computer programming, for example…. On a recent afternoon in San Francisco, while researching a book on artificial intelligence, the computer scientist Robert Munro fed 100 English words into BERT: “jewelry,” “baby,” “horses,” “house,” “money,” “action.” In 99 cases out of 100, BERT was more likely to associate the words with men rather than women. The word “mom” was the outlier. “This is the same historical inequity we have always seen,” said Dr. Munro, who has a Ph.D. in computational linguistics and previously oversaw natural language and translation technology at Amazon Web Services.

- Why does the author think we should be happy to go from “more likely to associate men with computer programming” straight to “here’s a man to talk about it”? It’s like the NYT writing “mispelungs are a problem in communication”. So, how about not doing the thing you’re saying is bad? Or at the very least set an example: Munro could have deferred to a black woman and said “I’m new to this and confirming what’s been said, so let’s ask her”.

- There are many books already written about this by people of diverse backgrounds. Why talk to someone still in the research phase, and why this white man? Massachusetts Institute of Technology researcher Joy Buolamwini is such an obvious resource here. Or Yeshimabeit Milner, founder and executive director of Data for Black Lives, or MacArthur “Genius” award recipient Jennifer Eberhardt who published “Biased”, or Margaret Hu writing about a Big Data Constitution, or Caroline Criado Perez who published “Invisible Women”, or Renée Cummings at Columbia…come on, people, there’s even a searchable database.

- 100 English words is barely a talking point, so why is it here? Even I have done more research than this in my Big Data ethics classes over the past five years. We literally fed hundreds of words from dozens of languages into algorithms to break them. I’ll bet my students from diverse backgrounds would be better sources to quote than this one white man feeding “horses, money, baby, action” into any algorithm, new or old. Were the rest of the words on his list like “bro, polo, golf, football, beer, eggplant, testicles, patagonia…”? Perhaps we should also be asking why he thought to test whether horses, baby and jewelry would associate more with women than men. Does mom, which is so obviously not male, serve as an outlier more in terms of his own life choices?

- “This is the same historical inequity we have always seen” is a meaningless statement about history. Why can’t jewelry be associated with men? Historical inequity seen where? By whom? Over what period of time?

- Then I noticed…“previously oversaw natural language and translation technology at Amazon Web Services.” A quick check of LinkedIn revealed “Principal Product Manager at AWS Machine Learning, Sep 2016 – Jun 2017…I led product for Amazon Comprehend and Amazon Translate…the most senior Product Manager within AWS’s Machine Learning team”. Calling oneself the most senior product manager on a team usually means someone above was the overseer, not him. And even if we give him the benefit of the doubt, he was last there in 2017 and only stayed 10 months. It’s a stretch to hold that out as his prior experience. Why not speak to his recent work, his lack of focus on this topic and the reason his bias story from just a month ago makes him so relevant?

None of this is to fault Munro entirely for answering the call of a journalist. Hey, I answer calls about ethics all the time too and I’m a white man.

His response, however, could have been to steer the journalist towards leading his story with people who have already released their books (much as I discuss “Weapons of Math Destruction”), and to help the NYT represent topics of bias fairly even though, in the article’s own words, BERT and its peers are “more likely to associate men with computer programming”. It seems like a missed opportunity to avoid repeating known failures.

And if that isn’t enough, the article gets worse:

Researchers have long warned of bias in A.I. that learns from large amounts of data, including the facial recognition systems that are used by police departments and other government agencies as well as popular internet services from tech giants like Google and Facebook. In 2015, for example, the Google Photos app was caught labeling African-Americans as “gorillas.” The services Dr. Munro scrutinized also showed bias against women and people of color.

Oh really, Google was caught? Well do tell, who caught it then? Was it some non-white person who will remain without credit?

Yes. I’ve spoken about this at conferences many times, citing those people and the original work (i.e. instead of asking a white man from Stanford to give me his opinion).

What are the names of researchers who have long warned of bias? Were they women and people of color?

Yes. (See the names above.)

Yet the article returns once again to Munro, a Stanford-educated white man with 10 months at AWS who is researching a new book, for his opinions about women and people of color.

Wat.

We can do so much better.

Earlier, also in the NYT, a writer named Ruth Whippman gave some advice on what could be happening instead.

Use your platforms and your cultural capital to ask that men be the ones to do the self-improvement for once. Stand up for deference. Write the book that teaches men to sit back and listen and yield to others’ judgment. Code the app that shows them where to put the apologies in their emails. Teach them how to assess their own abilities realistically and modestly. Tell them to “lean out,” reflect and consider the needs of others rather than assertively restating their own. Sell the female standard as the norm.

If only Cade Metz had read it before publishing his own piece, he might have started by asking Munro whether, realistically and modestly speaking, there would be better candidates to feature in a story about bias, such as black and brown women who are already published and are long-time active thought leaders in the same space. Maybe he did ask yet still decided to run Munro as the lead in the story, and that would be even worse.