When I started my career in computer security in the 1990s I felt like I was quite late to the party; I had to catch up to the many experts who brought deep experience from decades of practice.

Instead I’ve realized over time that showing up late doesn’t mean that you aren’t also, at the same time, relatively… early.

It’s thus a bit surreal to have been in the field of misinformation (and disinformation) for so long because I keep hearing that it’s a *new* problem.

“It’s always a good time to invest in a good thing” as a U.S. Green Beret trained to blow up bridges in Ethiopia once told me.

With that in mind, here are some thoughts about data integrity from looking back, which may help others who are just now looking forward at misinformation.

Relatives and relativity

Relativity is fundamental to understanding integrity of information. Absolutism is not incompatible, however, with relativity. “We can be in different time zones yet still share the same concept of time” as my father once told me.

Indeed, what we believe often derives from our family. This is why racism (one of the most insidious forms of disinformation) tends to be something taught by parents. For example, naming a child Jefferson Beauregard Sessions III (after treasonous pro-slavery leaders) or even the awful Robert Lee shows how American families still openly spread false beliefs (racism) and refuse to stop disinformation.

A recent study highlights that fear tends to correlate with susceptibility to misinformation, which helps show why racist groups troubled by their own “survival” are so naturally oriented towards the stuff.

…words related to existentially-based needs (i.e., discussing death and religion frequently) is useful to distinguish who will eventually share fake-news…

Back in 2008 I wrote another simple explanation that has stuck with me ever since.

…they actually become more convinced they are in the right when evidence starts to challenge them…

Studying political science really helps tease apart the complexity of such an information integrity problem, as it demystifies power relationships that influence fear. Labels like “terrorist” get measured as relative as well as absolute terms, because beliefs originate in many varied perspectives.

“Havens of terror” for one group might be thought of as safe-houses (where freedom fighters gather to help end racism) for another group. I’ve written about this here before, using encrypted communications of an anti-apartheid movement as an example.

I’m reminded of such complexity every time I sip a glass of Knut Hansen.

They asked London. London said immediately, ‘Sink it.’ And they did what they were told. It would be like asking me to blow up the 8:45 train to London. I’d be absolutely certain there’d be friends, maybe even relatives of mine on that train, but if there’s any chance that Hitler’s going to get an atomic bomb, what else can you do?

Complexity and expertise

Rarely, if ever, do topics get boiled down into things that are easy, routine and require minimal judgment (ERM). Even the simple aspirin, as an ERM exercise in taking a pill, comes with complex warnings and dangers.

Thus people must turn to experts who can identify, store data, evaluate and adapt or analyze (ISEA) to make recommendations. Who is an expert? Why should we trust one person skilled with ISEA over another?

One of the best examples of this problem is Google. The founders took an old Beowulf cluster concept of inexpensive distributed computing (lots of power for little money), dumped every web page onto this cluster, then mixed in an ancient academic peer review (an open market of expertise) as a way to provide value.

In theory all the ideas on the web were meant to be filtered for integrity, such that “relevant” or “best” results would come from a simple word query (search).

This is obviously not how things turned out at all.

Google’s engine was immediately rife with horribly toxic misinformation. Human expertise was injected as a correction. With relativity in mind (see the first point above) a bunch of privileged white guys in fact made matters even worse.

My first encounter with racism in search was in 2009 when I was talking to a friend who casually mentioned one day, “You should see what happens when you Google ‘black girls.’” I did and was stunned.

Google capitalized on complexity with false representations of expertise. Powerful elites generated huge profits despite obvious failures in integrity because, when you really look into it… that’s the history of Stanford.

Did you know? I mean seriously, did you have any idea how horrible a human Stanford was?

Decisions and decisions

We all know how ERM feels far better than an ISEA when trying to figure out what to do.

Think of it as “should I take an aspirin, yes or no” versus “given this long list of symptoms and collection of samples from my body what are my best options for feeling better”.

Has anyone ever gotten frustrated with you when you lay out the details and they cut you off and say “get to the point” or “just tell me what to do”?

In real everyday terms people prefer moving ahead on known paths because they are under all kinds of pressure and distractions. Those who go about making decisions tend to be in a completely different mindset than those who are doing deep study and investigation of integrity and truth.

Engineering teams run into this problem all the time, as those closest to a problem may struggle to articulate it in a simple way that decision makers can jump on. “It depends” might work for lawyers who can leave decisions to unpredictable juries, but most people want things boiled down to simpler and simpler options.

Yes and no questions require less effort than wading through a long list of warmer and colder ones, yet binary is a very low-integrity way to represent the world, let alone handle risk.

The Challenger shuttle disaster was a case (perhaps most famously explained by Tufte as a visualization failure) where engineers knew that cold temperatures made launch safety uncertain. They couldn’t express that in a binary enough way (DO NOT LAUNCH) to prevent disaster. NASA struggled to make ISEA into a decision that was ERM: temperatures below X will destroy everything.

Bending and breaking

There are many ERM in life and we benefit from using them all the time. Routines bring joy to life (a quick snack of good food that we recognize and like) by reducing burden. Being reliably rested, clean and fed means we are ready to expand and explore so many other areas of life.

However, routines are subject to challenge and change. Unexpected details come and turn our simple routines back into complex problems, forcing a shift from rigid to flexible thought.

A serious problem with this was discussed extensively by philosophers in the 1700s. Perhaps Mary Wollstonecraft put it best when she wrote that women would be as successful and intelligent as men if allowed a similar education. She argued successfully that what ought to be was different from what is.

Her words sound true today because we have so much evidence to prove her right. However, for centuries past (let alone the 1900s if we’re talking about America) men used a strain of philosophy now known as “positivism” to argue that their lack of any observation (sensory experience) of women being equal to men was proof of what will be.

Confirmation bias is often how we talk about this sort of inflexibility today. When people are unwilling or unable to shift from ERM towards the hard work of ISEA (or to be open to someone else using ISEA to deliver a new trusted ERM), the door opens to dangerous social engineering, even among experts.

Trust and digital ethics

In the final paragraph of the section above I mentioned trust. It all comes down to this because data integrity is a matter of having systems that can establish and deliver trust through relationships, across boundaries and gaps.

Systems today are high technology and thus ethics shifts into study of digital ethics, but it’s still ethics even though more often we call it computer security (identity, authority, accountability).

When technology causes some “domain shift” (e.g. from ox and plow to diesel tractor) the ethical foundations (e.g. morality and adherence to rules) should not be discarded entirely as though trust has to be completely rebuilt every time a wheel is reinvented. Such destructive habits undermine integrity at a very significant level and the next thing you know a CEO is a serial liar and selling snake-oil to become the richest man on the planet.

This doesn’t have to be the case at all.

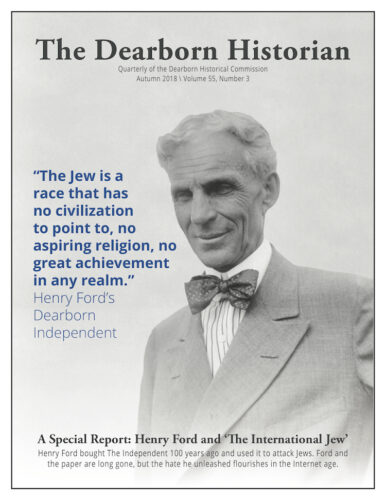

Misinformation such as racism using different media, whether it be from Elon Musk using his Twitter software or Henry Ford using his Ford International Weekly newspaper… isn’t as novel as people pretend (often in an attempt to escape past regulation and reason).

In fact changing the media doesn’t change the game at all. Old methods of misinformation (and disinformation) still work because new technology mostly speeds them up. Of course old counter-arguments remain valid as well, if we know what they are and how to deliver them with similarly greater velocity.

Integrity of information brought decisive influence on the battle-fields of world-wars (e.g. Beersheba in 1917) all the way to more modern regional ones (Cuito Cuanavale, Angola in 1987). Take for example the lessons learned when a hot-headed sycophant Nazi General Rommel by 1942 had become an easily predictable disaster. He was fooled by disinformation campaigns, while his own attempts at propaganda became the laughing stock of the world.

I tend to weave in history like this because success in great battles of the past can and will inform success in our present and future ones, even if they don’t repeat exactly.

The best General in American history, who went on to be the best President in American history, put it something like this: if we were fighting Napoleon I would put more trust in men who knew how to fight Napoleon. Instead Grant built trust from a constantly updating analysis of integrity threats to the country. His ability to always update and change for the better was what made him so amazing.

Who can forget, for example, that Grant heaped criticism upon the traitorous General Bragg while at the same time praising the Confederate Longstreet as a man of great integrity?

“Longstreet was an entirely different man [from Bragg]. He was brave, honest, intelligent, a very capable soldier…just and kind to his subordinates, but jealous of his own rights.”

Grant was spot on. General Bragg arguably was the worst strategist in the Civil War if not the worst human being (a place in history hard to achieve given it was next to the atrocious General “butcher” Lee).

Bragg’s legacy is that of a brutal slaveholder, miserable to his own troops and hated by all. What then could explain how or why such an obviously odious enemy of America had his name plastered boldly over a major U.S. Army base? Disinformation.

Ask any American why Civil War losers were able to write history in such an uncontested manner.

Their answer should be that in 1918 (the second rise of the KKK as led by President Woodrow Wilson, nudge nudge) a domestic terror group came up with Fort Bragg as information warfare.

Even more to the point, proving this wasn’t some small misunderstanding, Fort Bragg had its streets named for more and more sworn enemies of America: Alexander, Armistead, Donelson, Jackson, Mosby, Pelham and Reilly.

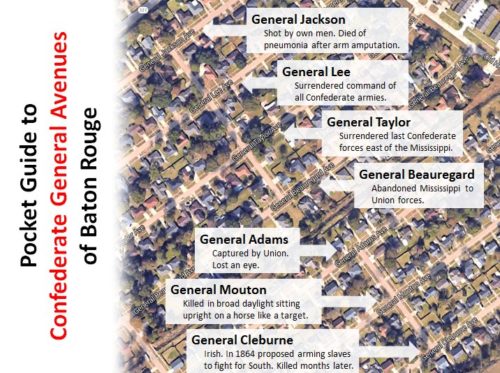

I’ve made light of this pervasive problem before, even creating a mock tourism guide.

Hint: America is all about losers writing and promoting history in a long game to perpetuate its racist origin story into a permanent power doctrine (e.g. white police state).

It is a country rife with unregulated disinformation nearly everywhere. American kids learn all about a myth of Washington instead of reality from Robert Carter. They learn a myth of Custer instead of reality from Silas Soule.

In that sense we should all be looking at past disinformation in order to see better forward. Many of the brilliant President Grant’s sentiments in the 1800s still seem critical for accurately predicting how to move Americans today from what is to what should be.

He was a clear thinker far ahead of his time, much like Wollstonecraft, and had many insights into how to handle misinformation and disinformation (data integrity in the 2020s) through regulation. When we think of trust as an ancient problem of ethics it helps highlight solutions just as old.

As a final thought on finding and trusting experts, always beware a technical domain “expert” who over-emphasizes newness of misinformation, or who downplays it entirely in favor of flogging confidentiality software.

While fetishistic absolutist confidentiality promoted by libertarian slush funds such as the EFF can get a lot of attention (e.g. end-to-end encryption everywhere) it also can and does dangerously harm data integrity; that leads directly to loss of life. Safety must come before privacy in many cases. It seems people finally are starting to see how obsession with “crypto” and “cryptography” often fails the most basic digital ethics tests, yet it should have been obvious to anyone trying to establish meaningful trust in systems.

I hope this post has helped cover some key areas of digital misinformation, and shown how study of past information conflicts may help avert present and even future suffering.